Preface

The determinant is a special scalar-valued function defined on the set of square matrices, that is, matrices with the same number of rows and columns. The determinant of a square n×n matrix A is calculated as a sum of n! terms, half of which enter with a plus sign and half with a minus sign (for n ≥ 2). It is usually denoted by det(A), det A, or |A|, and it is equal to \( (-1)^n \) times the constant term of the characteristic polynomial \( \det \left( \lambda {\bf I} - {\bf A} \right) \) of A. Although the determinant still has a place in many areas of mathematics and physics, our primary application of determinants is to define eigenvalues and characteristic polynomials for a square matrix A. The term determinant was first introduced by the German mathematician Carl Friedrich Gauss in 1801. There are various equivalent ways to define the determinant of a square matrix; for a matrix of arbitrary size it can be defined by the Leibniz formula or the Laplace formula.
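The relation between the determinant and the constant term of the characteristic polynomial can be checked on a small example. The following is a minimal sketch; the matrix A and the symbol names n, p, and c0 are arbitrary choices made for illustration, and the polynomial is built explicitly in the convention det(x I − A).

```mathematica
(* Illustrative 3x3 matrix; the entries are an arbitrary choice *)
A = {{2, 1, 0}, {1, 3, -1}, {0, 4, 5}};
n = Length[A];

(* Characteristic polynomial in the convention det(x I - A) *)
ClearAll[x];
p = Det[x IdentityMatrix[n] - A];

(* The constant term is the value of the polynomial at x = 0 *)
c0 = p /. x -> 0;

(* (-1)^n times the constant term, next to the determinant itself *)
{(-1)^n c0, Det[A]}   (* {33, 33} *)
```

Note that the built-in CharacteristicPolynomial[A, x] uses the opposite convention, det(A − x I), so its constant term is Det[A] itself, without the factor \( (-1)^n \).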

Determinants

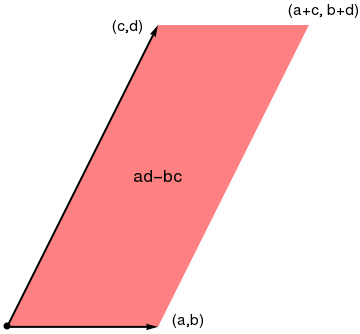

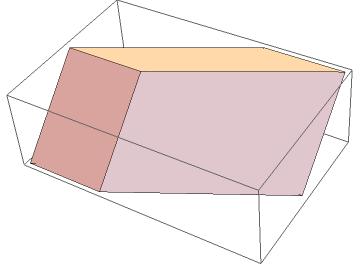

The determinant of a square n×n matrix A is the value calculated as the sum of n! terms, half of which are taken with a plus sign and the other half with a minus sign. The determinant of a 2×2 matrix is the (signed) area of the parallelogram that has the two column vectors of the matrix as two of its sides. Similarly, the determinant of a 3×3 matrix is the (signed) volume of the parallelepiped (skew box) that has the three column vectors as three of its edges. When the matrix represents a linear transformation, the absolute value of the determinant is the "volume distortion" experienced by a region after being transformed.
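The sum of n! signed terms mentioned above is the Leibniz formula. It can be sketched in Mathematica as follows; the function name leibnizDet and the test matrix are hypothetical choices made for illustration, and the code is meant to show the structure of the formula rather than an efficient way to compute determinants.

```mathematica
(* Leibniz formula: one term per permutation p of {1, ..., n}; each term is
   the product m[[1, p1]] m[[2, p2]] ... m[[n, pn]] times the sign of p *)
leibnizDet[m_?SquareMatrixQ] := Module[{n = Length[m]},
  Sum[
    Signature[perm] Product[m[[i, perm[[i]]]], {i, n}],
    {perm, Permutations[Range[n]]}
  ]
]

A = {{2, 1, 0}, {1, 3, -1}, {0, 4, 5}};
leibnizDet[A] == Det[A]   (* True *)

(* For n = 4 there are 4! = 24 terms: 12 with sign +1 and 12 with sign -1 *)
Tally[Signature /@ Permutations[Range[4]]]   (* {{1, 12}, {-1, 12}} *)
```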

The Laplace expansion, named after Pierre-Simon Laplace and also called cofactor expansion, is an expression for the determinant |A| of an n × n matrix A. It is a weighted sum of the determinants of n sub-matrices of A, each of size (n−1) × (n−1). The Laplace expansion and the Leibniz formula are of theoretical interest as two of several ways to view the determinant, but they are not practical for computing determinants. Therefore, we do not pursue these expansions in detail.

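If we denote by \( C_{ij} = (-1)^{i+j} M_{i,j} \) the cofactor of the (i, j) entry of the matrix \( {\bf A} = \left[ a_{i,j} \right] , \) where \( M_{i,j} \) is the determinant of the (n−1) × (n−1) sub-matrix obtained from A by deleting its i-th row and j-th column, then Laplace's expansion along the i-th row can be written as

\[ \det {\bf A} = \sum_{j=1}^n a_{i,j} \, C_{ij} , \qquad i = 1, 2, \ldots , n . \]

An analogous expansion holds along any column.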
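A cofactor expansion along a chosen row can be sketched directly from these definitions. The helper names minor, cofactor, and laplaceRow are hypothetical (they are not built-in functions), and the test matrix is an arbitrary illustration.

```mathematica
(* Minor: determinant of the sub-matrix with row i and column j deleted *)
minor[m_, i_, j_] := Det[Drop[m, {i}, {j}]]

(* Cofactor: the minor with the checkerboard sign (-1)^(i+j) *)
cofactor[m_, i_, j_] := (-1)^(i + j) minor[m, i, j]

(* Laplace expansion along row i *)
laplaceRow[m_, i_] := Sum[m[[i, j]] cofactor[m, i, j], {j, Length[m]}]

A = {{2, 1, 0}, {1, 3, -1}, {0, 4, 5}};

(* Expanding along each of the three rows gives the same value, Det[A] *)
Table[laplaceRow[A, i], {i, 3}]   (* {33, 33, 33} *)
```

The sub-determinants here are evaluated with the built-in Det to keep the sketch short; a fully recursive version would call laplaceRow on each sub-matrix instead.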

The concept of a determinant actually appeared nearly two millennia before its supposed invention by the Japanese mathematician Seki Kowa (1642--1708) in 1683, or by his German contemporary Gottfried Leibniz (1646--1716). Traditionally, the determinant of a square matrix A is denoted by det(A), det A, or |A|.

In the case of a 2 × 2 matrix (2 rows and 2 columns) A, the determinant is

\[ \det {\bf A} = \det \begin{bmatrix} a & b \\ c & d \end{bmatrix} = ad - bc . \]

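It is easy to remember when you think of a cross: multiply the two entries on the main diagonal, then subtract the product of the two entries on the other diagonal.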

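Similarly, for a 3 × 3 matrix (3 rows and 3 columns), we have a specific formula based on the Laplace expansion along the first row:

\[ \det \begin{bmatrix} a & b & c \\ d & e & f \\ g & h & i \end{bmatrix} = a \begin{vmatrix} e & f \\ h & i \end{vmatrix} - b \begin{vmatrix} d & f \\ g & i \end{vmatrix} + c \begin{vmatrix} d & e \\ g & h \end{vmatrix} = a \left( ei - fh \right) - b \left( di - fg \right) + c \left( dh - eg \right) . \]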

It may look complicated, but there is a pattern:

To work out the determinant of a 3×3 matrix:

- Multiply a by the determinant of the 2×2 matrix that is not in a's row or column.

- Likewise for b and for c.

- Sum the three products, but remember the minus sign in front of the b term.
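The three steps above can be checked symbolically. This sketch assumes the entries of the 3×3 matrix are labelled a through i row by row (so a, b, c form the first row); the names m3 and pattern are introduced only for this illustration.

```mathematica
(* Make sure the symbols carry no values from earlier work *)
ClearAll[a, b, c, d, e, f, g, h, i];

m3 = {{a, b, c}, {d, e, f}, {g, h, i}};

(* a, b, c times the 2x2 determinants outside their row and column,
   with a minus sign in front of the b term *)
pattern = a Det[{{e, f}, {h, i}}] - b Det[{{d, f}, {g, i}}] + c Det[{{d, e}, {g, h}}];

(* The difference from the built-in determinant expands to zero *)
Expand[pattern - Det[m3]]   (* 0 *)
```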

In Mathematica, the command Det[M] gives the determinant of the square matrix M:
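As a brief illustration of Det, the matrices below are arbitrary examples chosen here, not taken from the original page. The second one has linearly dependent rows, so its determinant vanishes.

```mathematica
(* A nonsingular 3x3 matrix *)
M = {{1, 2, 3}, {4, 5, 6}, {7, 8, 10}};
Det[M]   (* -3 *)

(* A singular matrix: the rows form an arithmetic progression *)
S = {{1, 2, 3}, {4, 5, 6}, {7, 8, 9}};
Det[S]   (* 0 *)
```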

Inverse Matrix

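Let \( {\bf A} = \left[ a_{i,j} \right] \) be an n×n matrix with cofactors \( C_{ij} = (-1)^{i+j} M_{i,j} , \ i,j = 1,2, \ldots , n , \) where \( M_{i,j} \) is the determinant of the sub-matrix obtained from A by deleting its i-th row and j-th column. The matrix formed by all of the cofactors is called the cofactor matrix (also called the matrix of cofactors or, sometimes, comatrix):

\[ {\bf C} = \begin{bmatrix} C_{11} & C_{12} & \cdots & C_{1n} \\ C_{21} & C_{22} & \cdots & C_{2n} \\ \vdots & \vdots & \ddots & \vdots \\ C_{n1} & C_{n2} & \cdots & C_{nn} \end{bmatrix} . \]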
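A cofactor matrix can be assembled entry by entry from this definition. In the sketch below, cofactorMatrix and cof are hypothetical names (the single letter C is a protected symbol in Mathematica, so it is avoided), and the test matrix is arbitrary. The last two lines use the standard identity that A times the transpose of its cofactor matrix equals det(A) times the identity matrix.

```mathematica
(* Entry (i, j) is (-1)^(i+j) times the determinant of A with
   row i and column j removed *)
cofactorMatrix[m_?SquareMatrixQ] :=
  Table[(-1)^(i + j) Det[Drop[m, {i}, {j}]], {i, Length[m]}, {j, Length[m]}]

A = {{2, 1, 0}, {1, 3, -1}, {0, 4, 5}};
cof = cofactorMatrix[A];

(* A times the transposed cofactor matrix is det(A) times the identity *)
A . Transpose[cof] == Det[A] IdentityMatrix[3]   (* True *)

(* Hence, for nonzero determinant, the inverse is Transpose[cof]/Det[A] *)
Inverse[A] == Transpose[cof]/Det[A]   (* True *)
```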

We list the main properties of determinants:

1. \( \det ({\bf I} ) = 1 ,\) where I is the identity matrix (all entries are zero except the diagonal entries, which are all ones).

2. \( \det \left( {\bf A}^{\mathrm T} \right) = \det \left( {\bf A} \right) . \)

3. \( \det \left( {\bf A}^{-1} \right) = 1/\det \left( {\bf A} \right) = \left( \det {\bf A} \right)^{-1} . \)

4. \( \det \left( {\bf A}\, {\bf B} \right) = \det {\bf A} \, \det {\bf B} , \) where A B is the matrix product.

5. \( \det \left( c\,{\bf A} \right) = c^n \,\det \left( {\bf A} \right) \) for an \( n\times n \) matrix A and a scalar c.

6. If \( {\bf A} = [a_{i,j}] \) is a triangular matrix, i.e. \( a_{i,j} = 0 \) whenever i > j or, alternatively, whenever i < j, then its determinant equals the product of the diagonal entries:

\[ \det {\bf A} = a_{1,1} \, a_{2,2} \cdots a_{n,n} = \prod_{i=1}^n a_{i,i} . \]
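The six properties listed above can be spot-checked on concrete matrices. This is only a numerical illustration under the assumption of two arbitrary invertible 3×3 matrices A and B; it is not a proof. Note that the matrix product in Mathematica is written with a dot, A . B, whereas A * B multiplies entry by entry.

```mathematica
A = {{2, 1, 0}, {1, 3, -1}, {0, 4, 5}};
B = {{1, 0, 2}, {-1, 1, 0}, {3, 2, 1}};
n = Length[A];

{
 Det[IdentityMatrix[n]] == 1,                        (* property 1 *)
 Det[Transpose[A]] == Det[A],                        (* property 2 *)
 Det[Inverse[A]] == 1/Det[A],                        (* property 3 *)
 Det[A . B] == Det[A] Det[B],                        (* property 4 *)
 Det[5 A] == 5^n Det[A],                             (* property 5 *)
 Det[UpperTriangularize[A]] == Times @@ Diagonal[A]  (* property 6 *)
}
(* {True, True, True, True, True, True} *)
```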

We list some basic properties of the inverse operation:

1. \( \left( {\bf A}^{-1} \right)^{-1} = {\bf A} . \)

2. \( \left( c\,{\bf A} \right)^{-1} = c^{-1} \,{\bf A}^{-1} \) for nonzero scalar c.

3. \( \left( {\bf A}^{\mathrm T} \right)^{-1} = \left( {\bf A}^{-1} \right)^{\mathrm T} . \)

4. \( \left( {\bf A}\, {\bf B} \right)^{-1} = {\bf B}^{-1} {\bf A}^{-1} . \)
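The four properties of the inverse can be checked in the same spirit. The matrices A and B below are arbitrary invertible examples chosen for illustration; exact integer entries are used so that the comparisons are exact.

```mathematica
A = {{2, 1, 0}, {1, 3, -1}, {0, 4, 5}};
B = {{1, 0, 2}, {-1, 1, 0}, {3, 2, 1}};

{
 Inverse[Inverse[A]] == A,                        (* property 1 *)
 Inverse[5 A] == Inverse[A]/5,                    (* property 2 with c = 5 *)
 Inverse[Transpose[A]] == Transpose[Inverse[A]],  (* property 3 *)
 Inverse[A . B] == Inverse[B] . Inverse[A]        (* property 4: order reverses *)
}
(* {True, True, True, True} *)
```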

Simplify[Inverse[A]]

For any unit vector v, we define the reflection matrix:

Inverse[R]
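The formula for the reflection matrix is not shown above. The sketch below assumes the standard Householder form \( {\bf R} = {\bf I} - 2\, {\bf v}\,{\bf v}^{\mathrm T} , \) which may differ from the definition originally intended; the vector v is an arbitrary illustration. Under that assumption, the reflection is its own inverse and has determinant −1.

```mathematica
(* Assumption: R = I - 2 v v^T (Householder reflection) *)
v = Normalize[{1, 2, 2}];                      (* unit vector {1/3, 2/3, 2/3} *)
R = IdentityMatrix[3] - 2 Outer[Times, v, v];  (* Outer[Times, v, v] is v v^T *)

Inverse[R] == R   (* True: reflecting twice returns every vector *)
Det[R]            (* -1 *)
```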
