This is a

tutorial made solely for the purpose of education and it was designed

for students taking Applied Mathematics 0340. It is primarily for students who

have some experience using Mathematica. If you have never used

Mathematica before and would like to learn more of the basics for this

computer algebra system, it is strongly recommended looking at the APMA

0330 tutorial. As a friendly reminder, don'r forget to clear variables in use and/or the kernel.

Finally, the commands in this tutorial are all written in bold black font,

while Mathematica output is in regular fonts. This means that you can

copy and paste all commands into Mathematica, change the parameters and

run them. You, as the user, are free to use the scripts

to your needs for learning how to use the Mathematica program, and have

the

right to distribute this tutorial and refer to this tutorial as long as

this tutorial is accredited appropriately. The tutorial accompanies the

textbook Applied Differential Equations.

The Primary Course by Vladimir Dobrushkin, CRC Press, 2015; http://www.crcpress.com/product/isbn/9781439851043

Return to computing page for the first course APMA0330

Return to computing page for the second course APMA0340

Return to Mathematica tutorial for the first course APMA0330

Return to Mathematica tutorial for the second course APMA0340

Return to the main page for the course APMA0340

Return to the main page for the course APMA0330

SVD factorization

One of the most fruitful ideas in the theory of matrices is that of matrix decomposition or canonical form. The theoretical

utility of matrix decompositions has long been appreciated. Previously, we have discused LU-decomposition

and QR-factorization for rectangular m-by-n matrices. For a square

\( n \times n \) matrix, we know even more canonical forms:

- An eigenvalue decomposition (abbreviated EVD) of a symmetrical (self-adjoint) matrix

\[

{\bf A} = {\bf P}\,{\bf \Lambda}\,{\bf P}^{\mathrm T} \qquad \mbox{or} \qquad {\bf A} = {\bf P}\,{\bf \Lambda}\,{\bf P}^{\ast} .

\]

where P is an n-by-n orthogonal (unitary) matrix of eigenvalues of A,

and Λ is the diagonal matrix whose diagonal entries are eigenvalues corresponding to the column vectors of S.

- A Hessenberg decomposition of a square matrix with real entries, due to the German electrical engineer Karl Hessenberg (1904--1959),

\[

{\bf A} = {\bf P}\,{\bf H}\,{\bf P}^{\mathrm T} ,

\]

in which P is an orthogonal matrix and H is in upper Hessenberg form:

\( {\bf H} = \begin{bmatrix} x&x & \cdots &x&x&x \\ x&x& \cdots &x&x&x \\ 0&x& \cdots&x&x&x \\ \vdots&\vdots & \ddots &\vdots& \vdots& \vdots \\

0&0& \cdots &x&x&x \\ 0&0& \cdots &0&x&x \end{bmatrix} . \)

- Schur's decomposition for an n-by-n matrix A with real entries and

real eigenvalues: \( {\bf A} = {\bf P}\,{\bf S}\,{\bf P}^{\mathrm T} , \) where P is

an orthogonal matrix such that \( {\bf P}^{\mathrm T} {\bf A} \, {\bf P} = \begin{bmatrix}

\lambda_1 & x & x& \cdots & x \\ 0&\lambda_2 & x & \cdots & x \\ 0&0&\lambda_3& \cdots & x \\ \vdots&\vdots& \vdots & \ddots & \vdots \\

0&0&0& \cdots & \lambda_n \end{bmatrix} , \) in which \( \lambda_1 , \lambda_2 , \ldots , \lambda_n \)

are the eigenvalues of A repeated according to multiplicity.

- Cholesky decomposition of a positive definite matrix: \( {\bf A} = {\bf L}\,{\bf L}^{\ast} , \)

where L is a lower triangular matrix.

Of many useful decompositions, the singular value decomposition---that is,

the factorization of a m-by-n matrix A into the product \( {\bf U}\, {\bf \Sigma} \,{\bf V}^{\ast} \) of a unitary (or orthogonal) \( m \times m \) matrix U, a \( m \times n \) 'diagonal' matrix \( {\bf \Sigma} \) and another unitary \( n \times n \) matrix \( {\bf V}^{\ast} \) ---has assumed a special role. There are several reasons. In the first place,

the fact that the decomposition is achieved by unitary matrices makes it an ideal vehicle for discussing the geometry

of n-space. Second, it is stable; small pertubations in A corresponds to small pertubabtions in \( {\bf \Sigma} .\) Third, the diagonality of \( {\bf \Sigma} \) makes it easy to operate and solve equations.

It is an intriguing observation that most of the classical matrix decompositions predated the widespread use of

matrices. In 1873, the Italian mathematician Eugenio Beltrami (1835--1899) published a first paper on SVD, which was

followed by the work of Camille Jordan in 1874, whom we can consider as a codiscover. Later James Joseph Sylvester (1814--1897),

Edhard Schmidt (1876--1959), and Hermann Weyl (1885--1955) were responsible for establishing the existence of the

singular value decomposition (SVD) and developing its theory. The term ``singular value'' seems to have come from the literature on integral equations.

The British-born American mathematician Harry Bateman (1882--1946) used it in a research paper published in 1908.

Let A be an arbitrary real- or complex-valued \( m \times n \) matrix. Then

- \( {\bf A}^{\ast} {\bf A} \) and \( {\bf A} \, {\bf A}^{\ast} \) are

both symmetric positive semidefinite matrices of dimensions \( n \times n \) and \( m \times m ,\) respectively.

- The equality \( {\bf A} = {\bf U}\,{\bf \Sigma}\, {\bf V}^{\ast} = {\bf U}\,{\bf \Sigma}\, {\bf V}^{-1} \) is called the singular value decomposition (or SVD) of

A, where V is unitary (or orthogonal in real-valued case) \( n \times n \) matrix, U

is unitary/orthogonal \( m \times m \) matrix, and \( {\bf \Sigma} = {\bf U}^{\ast} {\bf A}\, {\bf V} \)

is diagonal \( m \times n \) matrix, with \( {\bf \Sigma} = \mbox{diag}( \sigma_1 , \sigma_2 , \ldots , \sigma_p ), \) where

\( p = \min (m,n) \) and the nonnegative numbers \( \sigma_1 \ge \sigma_2 \ge \cdots \ge\sigma_p \ge 0 \) are

singular values of A.

- If r is rank of A, then A has exactly r positive singular values

\( \sigma_1 \ge \sigma_2 \ge \cdots \ge\sigma_r > 0 \) with \( \sigma_{r+1} = \sigma_{r+2} = \cdots = \sigma_p =0 . \)

- Any real \( m \times n \) matrix A can be expressed as a finite sum of

rank 1 matrices in normalized form, that is, \( {\bf A} = \sigma_1 {\bf R}_1 + \sigma_2 {\bf R}_2

+ \cdots + \sigma_r {\bf R}_r , \) where \( {\bf R}_i = {\bf u}_i {\bf v}_i^{\ast} = {\bf u}_i \otimes {\bf v}_i , \)

\( {\bf u}_i \) is the i-th column of U and a unit vector of \( {\bf A}\,{\bf A}^{\ast} , \) and

\( {\bf v}_i \) is the i-th column of V and a unit eigenvector of

\( {\bf A}^{\ast} {\bf A} . \)

- The pseudoinverse \( {\bf A}^{\dagger} \) of a matrix with SVD \( {\bf A} = {\bf U}\,{\bf \Sigma}\, {\bf V}^{\ast} \) is the matrix

\( {\bf A}^{\dagger} = {\bf V} {\bf \Sigma}^{\dagger} {\bf U}^{\ast} \) with

\[

{\bf \Sigma}^{\dagger} = \mbox{diag} \left( \frac{1}{\sigma_1} , \cdots , \frac{1}{\sigma_r} , 0, \cdots , 0\right) \in \mathbb{R}^{m\times n} .

\]

Theorem: If A is an \( m \times n \) matrix with real entries, then

- A and ATA have the same null space (kernel).

- A and ATA have the same row space.

- AT and ATA have the same column space.

- A and ATA have the same rank.

Theorem: If A is an \( m \times n \) real matrix, then

- ATA is orthogonally diagonalizable: \( {\bf V}^{\mathrm T} \left(

{\bf A}^{\mathrm T} {\bf A} \right) {\bf V} = \mbox{diag} \left( \sigma_1^2 , \ldots , \sigma_n^2 \right) . \)

- The eigenvalues \( \{ \sigma_1^2 , \ldots , \sigma_n^2 \} \) of ATA are nonnegative.

- The column vectors of U are the eigenvectors of the symmetric matrix AAT. ■

If A is a square matrix, then there is no relation between eigenvalues of A and

ATA as the following example shows.

Example. Consider the matrix

\[

{\bf A} = \begin{bmatrix} 4&2&5 \\ 0&16&0 \\ 0&14&9 \end{bmatrix}

\]

that we met previously. This matrix A has three distinct positive (integer) eigenvalues \(

\lambda = 16, 9, 4 . \) Two symmetric matrices

\[

{\bf B}_1 = {\bf A}^T {\bf A} = \begin{bmatrix} 16&8&20 \\ 8&456&136 \\ 20&136&106 \end{bmatrix} \quad \mbox{and} \quad

{\bf B}_2 = {\bf A} \, {\bf A}^T = \begin{bmatrix} 45&32&73 \\ 32&256&224 \\ 73&224&277 \end{bmatrix}

\]

share the same positive irrational eigenvalues (but have different eigenvectors):

\[

\lambda_1 \approx 503.039 , \qquad \lambda_2 \approx 64.7801, \qquad \lambda_3 \approx 10.1813 .

\]

We can use a standard Mathematica command to obtain SVD for the given matrix

ParametricPlot[{4*Sin[u]*Cos[v] + 11*Sin[u]*Sin[v] + 14*Cos[u],

8*Sin[u]*Cos[v] + 7*Sin[u]*Sin[v] - 2*Cos[u]}, {u, 0,

1*Pi}, {v, -Pi, 2*Pi}]

Out[15]=

Definition: If A is an \( m \times n \) matrix,

and if \( \lambda_1 , \lambda_2 , \ldots , \lambda_n \) are the eigenvalues of

ATA, then the numbers

\[

\sigma_1 = \sqrt{\lambda_1} , \quad \sigma_2 = \sqrt{\lambda_2} , \quad \ldots \quad , \quad \sigma_n = \sqrt{\lambda_n}

\]

are called the singular values of A. In other words, a nonnegative real

number σ is a singular value for m-by-n matrix A if and only if there exist

unit-length vectors \( {\bf u} \in \mathbb{R}^m \quad\mbox{and} \quad {\bf v} \in \mathbb{R}^n \)

such that \( {\bf A}{\bf v} = \sigma {\bf u} . \) The vectors u and

v are called left singular and right singular vectors for σ, respectively. ■

Theorem: Let A be a m-by-n matrix with

complex or real entries. Then its largest singular value equals to the Euclidean 2-norm: \( \max

\{ \sigma_i \} = \| {\bf A} \|_2 = \max_{\| {\bf x} \| =1} \| {\bf A} {\bf x} \| , \)

and square root of the sum of squares of the singular values equals the

Frobenius norm: \( \sum_i \sigma_i^2 = \| {\bf A} \|_F^2 =

\sum_{i,j} |a_{i,j} |^2 = \mbox{trace} \left( {\bf A}^{\ast} {\bf A} \right) . \) ■

There are known two forms of the singular value decompositions---the brief (or reduced) version and the expanded form:

Theorem: (Singular Value Decomposition in expanded form)

If A is an \( m \times n \) matrix of rank r, then

A can be factored as

\[

{\bf A} = \left[ {\bf u}_1 \ {\bf u}_2 \ \cdots \ {\bf u}_r \vert {\bf u}_{r+1} \ \cdots \ {\bf u}_m \right]

\left[ \begin{array}{cccc|c} \sigma_1 & 0 & \cdots & 0 & \\ 0& \sigma_2 & \cdots & 0 & \\

\vdots & \vdots & \ddots & \vdots & {\bf 0}_{r\times (n-r)} \\ 0&0& \cdots & \sigma_r & \\ \hline \\

&& {\bf 0}_{(m-r)\times r} & &{\bf 0}_{(m-r)\times (n-r)} \end{array} \right] \begin{bmatrix} {\bf v}_1^{\mathrm T} \\ \vdots \\ {\bf v}_r^{\mathrm T} \\ \hline {\bf v}_{r+1}^{\mathrm T}

\\ \vdots \\ {\bf v}_n^{\mathrm T} \end{bmatrix}

\]

in which U, Σ, and V have sizes \( m \times m , \)

\( m \times n , \) and \( n \times n ,\) respectively, and in which:

- \( {\bf V} = \left[ {\bf v}_1 \ {\bf v}_2 \ \cdots \ {\bf v}_n \right] \) orthogonally diagonalizes

\( {\bf A}^{\mathrm T} {\bf A} = {\bf V} \mbox{diag} (\sigma_i^2 ) \,{\bf V}^{\mathrm T} .\) Column vectors in V are usually called right singular vectors of A.

- The nonzero diagonal entries of Σ are \( \sigma_1 = \sqrt{\lambda_1} ,

\ \sigma_2 = \sqrt{\lambda_2} , \ldots , \sigma_r = \sqrt{\lambda_r} , \) where \( \lambda_1 ,

\lambda_2 , \ldots , \lambda_r \) are the nonzero eigenvalues of ATA

corresponding to the column vectors of V.

- The column vectors of V are ordered so that \( \sigma_1 \ge \sigma_2 \ge \cdots \ge \sigma_r >0. \)

- \( {\bf u}_i = \frac{{\bf A} {\bf v}_i}{\| {\bf A} {\bf v}_i \|} = \frac{1}{\sigma_i} \, {\bf A}\,{\bf v}_i

\quad (i=1,2,\ldots , r) . \)

- \( {\bf U} = \left[ {\bf u}_1 , {\bf u}_2 , \ldots , {\bf u}_r \right] \)

is an orthogonal basis for the column space of A. Column vectors in U are usually called left singular vectors of A.

- \( \left[ {\bf u}_1 , {\bf u}_2 , \ldots , {\bf u}_r , {\bf u}_{r+1} , \ldots , {\bf u}_m \right] \)

is an extension of \( \left[ {\bf u}_1 , {\bf u}_2 , \ldots , {\bf u}_r \right] \) to

an orthogonal basis for \( {\mathbb R}^m . \) ■

When rank r of \( m \times n \) matrix A is less than

\( \min \{ m, n \} , \) the most entries of 'diagonal'

\( m \times n \) matrix Σ are zeroes except singular values

\( \sigma_1 , \ldots , \sigma_r . \) Therefore, when this matrix is multiplied from

left by U and from right by VT, their entries \(

{\bf u}_{r+1} , \ldots , {\bf u}_m \) and \( {\bf v}_{r+1} , \ldots , {\bf v}_n \)

do not contribute to the final matrix. So we can drop them and represent A in the reduced form, as the

following theorem states.

Let r be the rank of m-by-n matrix A. Then \( \sigma_{r+1} =

\sigma_{r+2} = \cdots = \sigma_n . \) Partition \( {\bf U} = \left[ {\bf U}_1 \ {\bf U}_2 \right] \)

and \( {\bf V} = \left[ {\bf V}_1 \ {\bf V}_2 \right] , \) where

\( {\bf U}_1 = \left[ {\bf u}_1 \ , \ldots , \ {\bf u}_r \right] \) and

\( {\bf V}_1 = \left[ {\bf v}_1 \ , \ldots , \ {\bf v}_r \right] \) have r columns.

Then with \( {\bf \Sigma}_r = \mbox{diag}(\sigma_1 , \ldots , \sigma_r ) : \)

\begin{align*}

{\bf A} &= \left[ {\bf U}_1 \ {\bf U}_2 \right] \left( \begin{array}{cc} {\bf \Sigma}_r & {\bf 0}_{r\times (n-r)} \\

{\bf 0}_{(m-r)\times r} & {\bf 0}_{(m-r) \times (n-r)} \end{array} \right) \left[ {\bf V}_1 \ {\bf V}_2 \right]^{\mathrm T} \qquad\mbox{full SVD}

\\

&= {\bf U}_1 {\bf \Sigma}_r {\bf V}_1^{\mathrm T} = \sum_{i=1}^r \sigma_i {\bf u}_i \otimes {\bf v}_i \qquad \mbox{brief SVD} .

\end{align*}

Theorem: (Singular Value Decomposition in brief/reduced form)

If A is an \( m \times n \) matrix

of rank r, then A can be expressed in the form

\( {\bf A}_{m\times n} = {\bf U}_{m\times r} {\bf \Sigma}_r {\bf V}^{\mathrm T}_{r\times n} , \)

where Σr has size r-by-r and

\[

{\bf \Sigma}_r = \left[ \begin{array}{cccc} \sigma_1 & 0 & \cdots & 0 \\ 0 & \sigma_2 & \cdots & 0 \\

\vdots & \vdots & \ddots & \vdots \\ 0&0& \cdots & \sigma_r \end{array} \right] , \quad {\bf U}_{m\times r} = \left[

{\bf u}_1 \ \cdots \ {\bf u}_r \right] , \quad {\bf V}_{n\times r} = \left[ {\bf v}_1 \ \cdots \ {\bf v}_r \right] .

\]

It is a custom to place the successive diagonal entries in r-by-r matrix Σr

in nonincreasing order, U is an m-by-r orthogonal matrix, and \( {\bf V}_{n\times r} \)

is an n-by-r

orthogonal matrix. ■

The matrices U and V are not uniquely determined by A,

but the diagonal entries of Σ are necessarily the singular values of A.

The set \( \{ {\bf u}_1 , \ldots , {\bf u}_r \} \) is an orthonormal basis for the column

space of A. The set \( \{ {\bf u}_{r+1} , \ldots , {\bf u}_m \} \) is an

orthonormal basis for the kernel (null space) of AT. The set

\( \{ {\bf v}_1 , \ldots , {\bf v}_r \} \) is an orthonormal basis for the row space of A.

The set \( \{ {\bf v}_{r+1} , \ldots , {\bf v}_n \} \) is an orthonormal basis for the

kernel of A.

One way to understand how a matrix A deforms space is to consider its action on the unit sphere in \( {\mathbb R}^n . \)

An arbitrary element x of this unit sphere can be represented by \( {\bf x} = x_1 v_1 + x_2 v_2 + \cdots + x_n v_n \)

with \( \sum_{i=1}^n x_i^2 =1 . \) The image is \( {\bf A}{\bf x} = \sigma_1 x_1 u_1 + \cdots + \sigma_m x_p u_p . \)

Letting \( y_i = \sigma_i x_i , \) we see that the image of the unit sphere consists of the vectors

\( y_1 u_1 + y_2 u_2 + \cdots + y_p u_p , \) where

\[

\frac{y_1^2}{\sigma_1^2} + \frac{y_2^2}{\sigma_2^2} + \cdots + \frac{y_p^2}{\sigma_p^2} = \sum_1^p x_i^2 \le 1.

\]

If A has full column rank (linearly independent columns), so \( p=n , \) the indequality is actually a strict equality.

Otherwise, some of the xi are missing on the right, and the sum can be any number from 0 to 1. This

shows that A maps the unit sphere of \( {\mathbb R}^n \) to a p-dimensional

ellipsoid with semi-axes in the directions ui and with magnitudes \( \sigma_i . \)

If \( p=n , \) the image is just the surface of the ellipsoid; otherwise it is the solid ellipsoid.

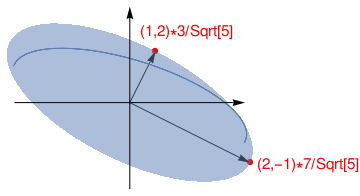

Example. If \( {\bf A} = \left[ \begin{array}{ccc} 1\ & 2& \ 6 \\ 2\ & -3 \ & -2 \end{array} \right] , \)

then the linear transformation \( {\bf x} \,\mapsto \,{\bf A}{\bf x} \) maps the unit sphere

\( \left\{ {\bf x} : \, \| {\bf x} \| =1 \right\} \) in \( {\bf \mathbb R}^3 \)

onto an ellipse in \( {\bf \mathbb R}^2 , \) shown in Figure:

point1 = Graphics[{Red, {PointSize[0.02], Point[{2, -1}*7/Sqrt[5]]}}]

point2 = Graphics[{Red, {PointSize[0.02], Point[{1, 2}*3/Sqrt[5]]}}]

ellipse =

ParametricPlot[{1*Sin[u]*Cos[v] + 2*Sin[u]*Sin[v] + 6*Cos[u],

2*Sin[u]*Cos[v] - 3*Sin[u]*Sin[v] - 2*Cos[u]}, {u, 0, 1*Pi}, {v, 0,

2*Pi}]

arrow1 = Graphics[{{Thick, Black},

Arrow[{{0, 0}, {2, -1}*7/Sqrt[5]}]}]

arrow2 = Graphics[{{Thick, Black}, Arrow[{{0, 0}, {1, 2}*3/Sqrt[5]}]}]

axes1 = Graphics[{{Dashed, Black}, Arrow[{{-6, 0}, {6, 0}}]}]

axes2 = Graphics[{{Dashed, Black}, Arrow[{{0, -4.5}, {0, 5}}]}]

text1 = Graphics[

Text[Style["(2,-1)*7/Sqrt[5]", FontSize -> 14, Red], {9.3, -3.2}]]

text2 = Graphics[

Text[Style["(1,2)*3/Sqrt[5]", FontSize -> 14, Red], {3.0, 3.7}]]

Show[point1, point2, arrow1, arrow2, ellipse, axes1, axes2, text1,

text2]

Out[15]=

The quantity \( \| {\bf A}{\bf x} \|^2 \) is maximized at the same x that maximizes

\( \| {\bf A}{\bf x} \| , \) and \( \| {\bf A}{\bf x} \|^2 \) is

easier to study:

\[

\| {\bf A}{\bf x} \|^2 = \left( {\bf A}{\bf x} \right)^{\mathrm T} \left( {\bf A}{\bf x} \right) = {\bf x}^{\mathrm T}

{\bf A}^{\mathrm T} {\bf A} {\bf x} = {\bf x}^{\mathrm T} \left( {\bf A}^{\mathrm T} {\bf A} \right) {\bf x} .

\]

Also, both \( {\bf A}^{\mathrm T} {\bf A} \) and \( {\bf A} {\bf A}^{\mathrm T} \)

are symmetric matrices:

\[

{\bf B}_1 = {\bf A}^{\mathrm T} {\bf A} = \begin{bmatrix} 5&-4&2 \\ -4&13&18 \\ 2&18&40 \end{bmatrix} \quad \mbox{and} \quad

{\bf B}_2 = {\bf A} {\bf A}^{\mathrm T} = \begin{bmatrix} 41&-16 \\ -16 &17 \end{bmatrix}

\]

that have three eigenvalues \( \lambda_1 =49, \quad \lambda_2 =9, \quad \lambda_3 =0 \)

for B1 and two eigenvalues \( \lambda_1 =49, \quad \lambda_2 =9 \)

for B2.

Corresponding unit eigenvectors for B1 (right singular vectors for A) are, respectively,

\[

{\bf v}_1 = \frac{1}{\sqrt{5}} \,\begin{bmatrix} 0 \\ 1 \\ 2 \end{bmatrix} , \quad {\bf v}_2 = \frac{1}{3\sqrt{5}} \,

\begin{bmatrix} 5 \\ -4 \\ 2 \end{bmatrix} , \quad {\bf v}_3 = \frac{1}{3} \, \begin{bmatrix} 2 \\ 2 \\ -1 \end{bmatrix} .

\]

Evaluating products, we get

\[

{\bf A} {\bf v}_1 = \frac{7}{\sqrt{5}} \,\begin{bmatrix} 2 \\ -1 \end{bmatrix} , \quad {\bf A} {\bf v}_2 = \frac{3}{\sqrt{5}} \,

\begin{bmatrix} 1 \\ 2 \end{bmatrix} , \quad {\bf A}{\bf v}_3 = {\bf 0} .

\]

Therefore, the maximum value of \( \| {\bf A}{\bf x} \|^2 \) is 49, attained when x is

the unit vector \( {\bf v}_1 . \) The vector \( {\bf A}{\bf v}_1 \) is a point

on the ellipse in Figure farthest from the origin \( \| {\bf A}{\bf v}_1 \| = 7 . \)

The singular values of A are the square roots of the eigenvalues of \( {\bf B}_1 = {\bf A}^{\mathrm T} {\bf A} : \)

\[

\sigma_1 = \sqrt{49} = 7, \quad \sigma_2 = \sqrt{9} =3, \quad \sigma_3 =0.

\]

The first singular value of A is the maximum of \( \| {\bf A}{\bf x} \| \) over all

unit vectors, and the maximum is attained at the unit vector \( {\bf v}_1 . \) This singular value 7 is

the 2-norm of matrix A:

\[

\sigma_1 = 7 = \| {\bf A} \|_2 < \left( \sum_{i=1}^2 \sum_{j=1}^3 \left\vert a_{i,j} \right\vert^2 \right)^{1/2} = \sqrt{58} = \| {\bf A} \|_F \approx 7.61577 ,

\]

which is the Frobenius norm. Normalizing \( {\bf A}{\bf v}_{1,2} , \) we obtain left singualr vectors of A:

\[

{\bf u}_1 = \frac{1}{\sigma_1} \,{\bf A} {\bf v}_1 = \frac{1}{\sqrt{5}} \,\begin{bmatrix} 2 \\ -1 \end{bmatrix} , \qquad

{\bf u}_2 = \frac{1}{\sigma_2} \,{\bf A} {\bf v}_2 = \frac{1}{\sqrt{5}} \,\begin{bmatrix} 1 \\ 2 \end{bmatrix} .

\]

However, Mathematica can provide you normalized vectors:

Eigenvectors[N[your matrix]]

Now we construct the orthogonal matrices

\[

{\bf U} = \left[ {\bf u}_1 \ {\bf u}_2 \right] = \frac{1}{\sqrt{5}}\, \begin{bmatrix} 2&1 \\ -1&2 \end{bmatrix} \quad\mbox{and} \quad

{\bf V} = \left[ {\bf v}_1 \ {\bf v}_2 \ {\bf v}_3 \right] = \frac{1}{3\sqrt{5}}\, \begin{bmatrix} 0&5&2\sqrt{5} \\ 3&-4& 2\sqrt{5} \\

6&2&-\sqrt{5} \end{bmatrix} .

\]

This allows us to obtain the expanded form of SVD for matrix A

\[

{\bf A} = {\bf U} {\bf \Sigma} {\bf V}^{\mathrm T} = \frac{1}{15} \begin{bmatrix} 2&1 \\ -1&2 \end{bmatrix} \,\begin{bmatrix} 7&0&0 \\ 0&3&0 \end{bmatrix}

\, \begin{bmatrix} 0&3&6 \\ 5&-4&2 \\ 2\sqrt{5} & 2\sqrt{5} & -\sqrt{5} \end{bmatrix}

\]

and the reduced form

\[

{\bf A} = {\bf U} {\bf \Sigma}_{2\times 2} {\bf V}^{\mathrm T}_{2\times 3} = \frac{1}{15} \begin{bmatrix} 2&1 \\ -1&2 \end{bmatrix} \,\begin{bmatrix} 7&0 \\ 0&3 \end{bmatrix}

\, \begin{bmatrix} 0&3&6 \\ 5&-4&2 \end{bmatrix} ,

\]

where

\[

{\bf \Sigma} = \begin{bmatrix} 7&0&0 \\ 0&3&0 \end{bmatrix} , \qquad {\bf \Sigma}_{2\times 2} = \begin{bmatrix} 7&0 \\ 0&3 \end{bmatrix} , \quad {\bf V}^{\mathrm T}_{2\times 3} = \begin{bmatrix} 0&3&6 \\ 5&-4&2 \end{bmatrix} .

\]

Finally, we represent matrix A as a sum of rank 1 matrices:

\[

{\bf A} = \sigma_1 {\bf R}_1 + \sigma_2 {\bf R}_2 = 7 {\bf R}_1 + 3 {\bf R}_2 ,

\]

where

\[

{\bf R}_1 = {\bf u}_1 {\bf v}_1^{\mathrm T} = \frac{1}{5} \,\begin{bmatrix} 0&2&4 \\ 0&-1&-2 \end{bmatrix} , \quad

{\bf R}_2 = {\bf u}_2 {\bf v}_2^{\mathrm T} = \frac{1}{15} \,\begin{bmatrix} 5&-4&2 \\ 10&-8&4 \end{bmatrix} .

\]

Example. Consider the full rank matrix

\[

{\bf A} = \begin{bmatrix} 1&2 \\ 0&2 \\ 2&0 \end{bmatrix} .

\]

Two symmetric matrices

\[

{\bf B}_1 = {\bf A}^T {\bf A} = \begin{bmatrix} 5&2 \\ 2&8 \end{bmatrix} \quad \mbox{and} \quad

{\bf B}_2 = {\bf A} \, {\bf A}^T = \begin{bmatrix} 5&4&2 \\ 4&4&0 \\ 2&0&4 \end{bmatrix} ,

\]

the latter is a singular one, share the same eigenvalues \( \lambda_{1} = 9, \quad \lambda_2 = 4, \)

having corresponding eigenvectors:

\[

{\bf v}_1 = \begin{bmatrix} 1 \\ 2 \end{bmatrix} , \quad {\bf v}_2 = \begin{bmatrix} 2 \\ -1 \end{bmatrix} ,

\]

and, including \( \lambda_{3} = 0 , \)

\[

{\bf u}_1 = \begin{bmatrix} 5 \\ 4 \\ 2 \end{bmatrix} , \quad {\bf u}_2 = \begin{bmatrix} 0 \\ -1 \\ 2 \end{bmatrix} ,

\quad {\bf u}_3 = \begin{bmatrix} -2 \\ 2 \\ 1 \end{bmatrix} .

\]

Since these two matrices are symmetric, the corresponding eigenvectors are orthogonal. We can normalize these vectors

by dividing by \( \| {\bf v}_1 \|_2 = \| {\bf v}_2 \|_2 = \sqrt{5} \approx 2.23607 \)

and \( \| {\bf u}_1 \|_2 = 3\sqrt{5} \approx 6.7082, \)

\( \| {\bf u}_2 \|_2 = \sqrt{5} \approx 2.23607 , \)

\( \| {\bf u}_3 \|_2 = 3 . \) This yields right singular vectors

\[

{\bf v}_1 = \frac{1}{\sqrt{5}} \,\begin{bmatrix} 1 \\ 2 \end{bmatrix} , \quad {\bf v}_2 = \frac{1}{\sqrt{5}} \,\begin{bmatrix} -2 \\ 1 \end{bmatrix} ,

\]

and left singular vectors

\[

{\bf u}_1 = \frac{1}{3\sqrt{5}} \,\begin{bmatrix} 5 \\ 4 \\ 2 \end{bmatrix} , \quad

{\bf u}_2 = \frac{1}{\sqrt{5}} \,\begin{bmatrix} 0 \\ -1 \\ 2 \end{bmatrix} , \quad

{\bf u}_3 = \frac{1}{3} \,\begin{bmatrix} -2 \\ 2 \\ 1 \end{bmatrix} ,

\]

Taking square roots of positive eigenvalues corresponding to B1 and

B2, we obtain singular values for matrix A:

\[

\sigma_1 = \sqrt{9} =3 , \quad \sigma_2 = \sqrt{4} =2 , \quad\mbox{and} \quad \sigma_3 =0.

\]

Then we build two orthogonal matrices:

\[

{\bf V} = \left[ {\bf v}_1 , \ {\bf v}_2 \right] = \frac{1}{\sqrt{5}} \,\begin{bmatrix} 1 & 2 \\

2 & -1 \end{bmatrix}

\]

and

\[

{\bf U} = \left[ {\bf u}_1 , \ {\bf u}_2 , \ {\bf u}_3 \right] = \frac{1}{3\sqrt{5}} \,\begin{bmatrix} 5 & 0 & -2\sqrt{5} \\

4 & -3 & 2\sqrt{5} \\

2 & 6 & \sqrt{5} \end{bmatrix} ,

\]

and the 'diagonal' matrix (which has the same dimensions as A)

\[

{\bf \Sigma} = \begin{bmatrix} \sigma_1 &0 \\ 0&\sigma_2 \\ 0&0 \end{bmatrix} = \begin{bmatrix} 3 &0 \\ 0&2 \\ 0&0 \end{bmatrix} .

\]

This allows us to determing SVD in extended form

\[

{\bf A} = {\bf U}\, {\bf \Sigma} \,{\bf V}^{\mathrm T} = \frac{1}{15}\,\begin{bmatrix} 5&0& -2\sqrt{5} \\ 4&-3& 2\sqrt{5} \\ 2&6&\sqrt{5} \end{bmatrix}

\begin{bmatrix} 3&0 \\0&2 \\ 0&0 \end{bmatrix}

\begin{bmatrix} 1&2 \\ 2&-1 \end{bmatrix} ,

\]

reduced form

\[

{\bf A} = {\bf U}_{3\times 2} {\bf \Sigma}_{2 \times 2} {\bf V}^{\mathrm T} = \frac{1}{15}\,\begin{bmatrix} 5&0 \\ 4&-3 \\ 2&6 \end{bmatrix} \begin{bmatrix} 3&0 \\0&2 \end{bmatrix}

\begin{bmatrix} 1&2 \\ 2&-1 \end{bmatrix} ,

\]

and represent A as a sum of rank 1 matrices:

\[

{\bf A} = \sigma_1 {\bf R}_1 + \sigma_2 {\bf R}_2 = \frac{1}{5} \, \begin{bmatrix} 5&10 \\ 4&8 \\ 2&4 \end{bmatrix} +

\frac{2}{5} \,\begin{bmatrix} 0&0 \\ -2&1 \\ 4&-2 \end{bmatrix} ,

\]

where

\[

{\bf R}_1 = {\bf u}_1 {\bf v}_1^{\mathrm T} = \frac{1}{15} \, \begin{bmatrix} 5&10 \\ 4&8 \\ 2&4 \end{bmatrix} , \quad

{\bf R}_2 = {\bf u}_2 {\bf v}_2^{\mathrm T} = \frac{1}{5} \, \begin{bmatrix} 0&0 \\ -2&1 \\ 4&-2 \end{bmatrix} .

\]

The pseudoinverse (also, the Moore--Penrose inverse) can be found using reduced SVD form:

\[

{\bf A}^{\dagger} = {\bf V} \,{\bf \Sigma}_{2\times 2}^{-1} {\bf U}_{2\times 3}^{\mathrm T} = \frac{1}{15} \,

\begin{bmatrix} 1&2 \\ 2&-1 \end{bmatrix} \begin{bmatrix} 1/3&0 \\ 0&1/2 \end{bmatrix} \begin{bmatrix} 5&4&2 \\ 0&-3&6 \end{bmatrix}

= \frac{1}{18} \, \begin{bmatrix} 2&2&8 \\ 4&5&2 \end{bmatrix} .

\]

Indeed,

\[

{\bf A}^{\dagger} {\bf A} = \begin{bmatrix} 1&0 \\ 0&1 \end{bmatrix} .

\]

Example.

Consider a 3-by-2 matrix \( {\bf A}^{\mathrm T} = \begin{bmatrix} 4& -1&1 \\ -4 & 1& -1 \end{bmatrix} . \)

First, we compute two symmetric singular matrices:

\[

{\bf B}_1 = {\bf A}^T {\bf A} = \begin{bmatrix} 18 &-18 \\ -18 &18 \end{bmatrix} \quad \mbox{and} \quad

{\bf B}_2 = {\bf A} \, {\bf A}^T = \begin{bmatrix} 32 &-8&8 \\ -8 &2&-2 \\ 8 &-2&2 \end{bmatrix}

. \]

The eigenvalues of B1 are 36 and 0, with corresponding unit vectors (that are right singular vectors of A)

\[

{\bf v}_1 = \frac{1}{\sqrt{2}} \, \begin{bmatrix} -1 \\ 1 \end{bmatrix} \quad \mbox{and} \quad

{\bf v}_2 = \frac{1}{\sqrt{2}} \, \begin{bmatrix} 1 \\ 1 \end{bmatrix} .

\]

These unit vectors form the columns of V:

\[

{\bf V} = \left[ {\bf v}_1 \ {\bf v}_2 \right] = \frac{1}{\sqrt{2}} \, \begin{bmatrix} -1&1 \\ 1&1 \end{bmatrix} .

\]

The singular values are \( \sigma_1 = \sqrt{36} =6 \quad\mbox{and} \quad \sigma_2 =0 . \)

Since there is only one nonzero singular value, the matrix Λ may be written as a single number; that is,

\( {\bf \Lambda} = \Lambda =6. \) The matrix Σ has the same size as A, with

Λ in its upper left corner:

\[

{\bf \Sigma} = \begin{bmatrix} \Lambda &0 \\ 0&0 \\ 0&0 \end{bmatrix} = \begin{bmatrix} 6&0 \\ 0&0 \\ 0&0 \end{bmatrix} .

\]

To determine U, first calculate

\[

{\bf A} {\bf v}_1 = \frac{1}{\sqrt{2}} \, \begin{bmatrix} -8 \\ 2 \\ -2 \end{bmatrix} = \sqrt{2} \, \begin{bmatrix} -4 \\ 1 \\ -1 \end{bmatrix} , \quad

{\bf A} {\bf v}_2 = \begin{bmatrix} 0 \\ 0 \\ 0 \end{bmatrix} .

\]

As a check on the calculations, we verify that \( \| {\bf A} {\bf v}_1 \| = \sigma_1 =6 . \)

The only column found for U so far is

\[

{\bf u}_1 = \frac{1}{\sigma_1} \, {\bf A} {\bf v}_1 = \frac{\sqrt{2}}{6} \, \begin{bmatrix} -4 \\ 1 \\ -1 \end{bmatrix} .

\]

The other columns of U are found by extending the set \( \{ {\bf u}_1 \} \)

to an orthonormal basis for \( \mathbb{R}^3 . \) In this case, we need two orthogonal unit vectors

u2 and u3 that are orthogonal to u1.

Each vector must satisfy \( {\bf u}_1^{\mathrm T} {\bf x} =0, \) which is equivalent to the

equation \( 4x_1 - x_2 + x_3 =0 . \) A basis for the solution set of this equation is

\[

{\bf w}_1 = \begin{bmatrix} 0 \\ 1 \\ 1 \end{bmatrix} , \qquad {\bf w}_2 = \begin{bmatrix} 1 \\ 4 \\ 0 \end{bmatrix} .

\]

Upon application of the Gram--Schmidt process to \( \{ {\bf w}_1 , \ {\bf w}_2 \} , \) we obtain

\[

{\bf u}_2 = \frac{1}{\sqrt{2}} \,\begin{bmatrix} 0 \\ 1 \\ 1 \end{bmatrix} , \qquad {\bf u}_3 = \frac{1}{3} \,

\begin{bmatrix} 1 \\ 2 \\ -2 \end{bmatrix} .

\]

Finally, set \( \{ {\bf U} = \left[ {\bf u}_1 \ {\bf u}_2 \ {\bf u}_3 \right] , \) take

Σ and \( \{ {\bf V}^{\mathrm T} \) from above, and write SVD for A:

\[

{\bf A} = \frac{1}{3\sqrt{2}} \,\begin{bmatrix} -4&0&\sqrt{2} \\ 1&3&2\sqrt{2} \\ -1&3&-2\sqrt{2} \end{bmatrix}

\begin{bmatrix} 6&0 \\ 0&0 \\ 0&0 \end{bmatrix} \frac{1}{\sqrt{2}} \,\begin{bmatrix} -1&1 \\ 1 & 1 \end{bmatrix} .

\]

However, its reduced form is very easy

\[

{\bf A} = {\bf U}_{3\times 1} {\bf \Sigma}_{1 \times 1} {\bf V}^{\mathrm T}_{1\times 2} = \begin{bmatrix} -4 \\ 1 \\ -1 \end{bmatrix} \cdot \left[ -1 \ 1 \right] .

\]

because we can keep the number Λ instead of matrix Σ:

\[

{\bf U}_{3\times 1} = \frac{\sqrt{2}}{6} \, \begin{bmatrix} -4 \\ 1 \\ -1 \end{bmatrix} , \quad

{\bf \Sigma}_{1 \times 1} = \Lambda = 6, \quad

{\bf V}^{\mathrm T}_{1\times 2} = \frac{1}{\sqrt{2}} \left[ -1 \ 1 \right] .

\]

Example.

However,