Preface

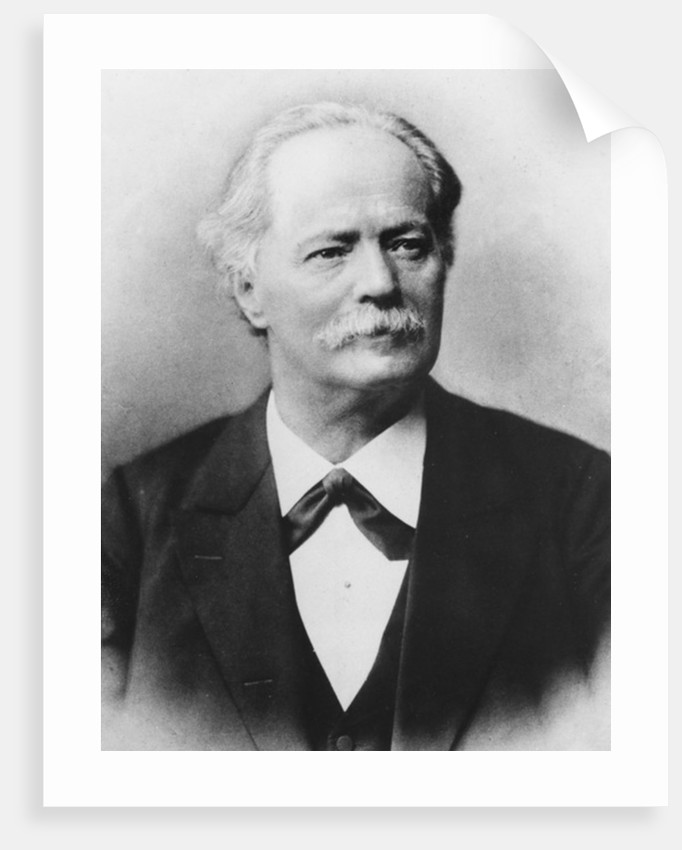

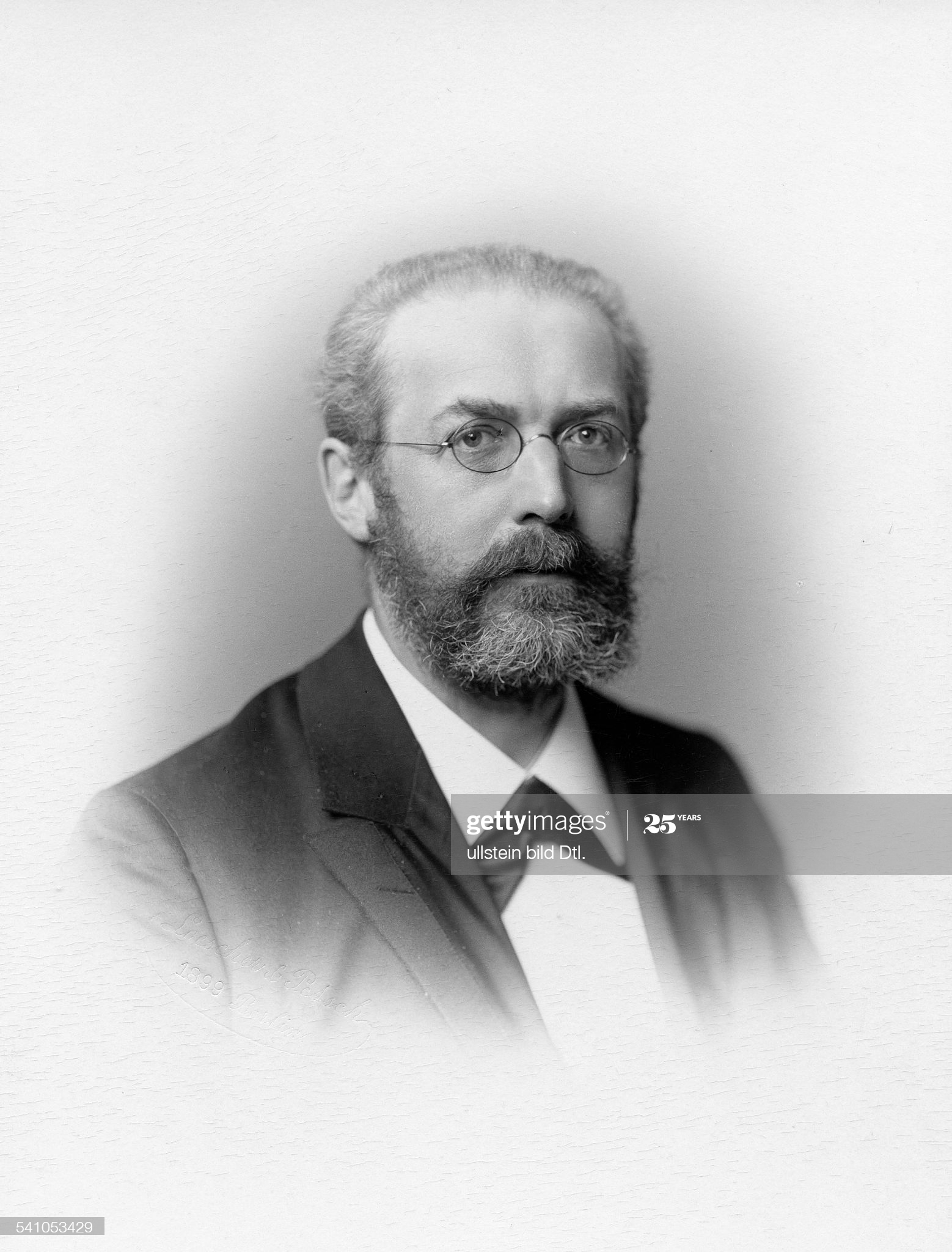

This section (including the subsections that follow) is devoted to the analysis of linear differential equations with singular points. The theory is due mainly to the German mathematicians Carl Gauss (1777--1855), Bernhard Riemann (1826--1866), Lazarus Fuchs (1833--1902), and Georg Frobenius (1849--1917). Gauss and Riemann initiated the investigation with their profound studies of second order hypergeometric equations (1812, 1857). The general equation of order n with regular singular points was treated by Fuchs (1866), and his method was later simplified by Frobenius (1873). It was Fuchs who wrote down the equation later called the indicial equation, whose roots determine the behavior of solutions near a regular singular point. Fuchs also pointed out that the singular points of the solutions must lie among the singular points of the coefficients; in particular, they are fixed. It was Frobenius who emphasized the convenience of introducing the factors x² and x in the coefficients of second order equations, which are now sometimes said to be in Frobenius normal form. The history of the power series method and its applications to differential equations with regular singular points is discussed in Gray's article.


Singular points

First, we extend the definition of singular points for differential equations of the second order.

For a linear differential equation, the definition above can be simplified: linear differential equations with smooth coefficients do not possess singular solutions, so the corresponding initial value problem always has a unique solution wherever the coefficients are smooth. Therefore, for linear equations a singular point can be identified by its abscissa alone.

By shifting the independent variable, we can always place a singular point at the origin without any loss of generality. Now we need another definition.

Singular points come in two kinds: regular and irregular. Regular singular points are well-behaved, and we are going to analyze this case. Irregular singular points are much more difficult to analyze, and we do not treat them here. If a differential equation has a singular point at x = 0, initial conditions are usually not specified at this point, because solutions may be undefined there or, if defined, may fail to be unique or fail to have a power series expansion.

Example: We give a couple of examples of singular differential equations for which it is difficult to define the solution at the singular point. For instance, consider the first order differential equation with a regular singular point

On the other hand, consider the differential equation with an irregular singular point

The differential equation \( \frac{{\text d}y}{{\text d}x} = \frac{x+y}{x} \) has a regular singular point at the origin: its right-hand side becomes infinite when x = 0, y ≠ 0. Its general solution \( y = x\,\ln x + C\,x \) (x > 0) tends to 0 as x → 0 for every value of C. However, this solution cannot be expressed as a Maclaurin series in powers of x. In this case, we have infinitely many solutions for the single initial value 0, and no solution for any other initial value of y at x = 0.
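A quick numerical check of this example, written as a minimal Python sketch: the helper names `y` and `residual` are ours, and the derivative is approximated by a central difference, so the residual is only approximately zero. It illustrates that every member of the family \( y = x\ln x + Cx \) satisfies the equation for x > 0 and that all of them approach 0 as x → 0+.

```python
import math

def y(x, C):
    """Member of the family y = x ln x + C x (defined for x > 0)."""
    return x * math.log(x) + C * x

def residual(x, C, h=1e-6):
    """Central-difference estimate of y'(x) minus the right-hand side (x + y)/x."""
    dydx = (y(x + h, C) - y(x - h, C)) / (2.0 * h)
    return dydx - (x + y(x, C)) / x

for C in (-2.0, 0.0, 3.5):
    # The residual is small, limited only by the finite-difference step ...
    print(f"C = {C:5.1f}: residual at x = 0.7 is {residual(0.7, C):.1e}")
    # ... and y(x) shrinks toward 0 as x -> 0+, whatever C is.
    print("   y(1e-2), y(1e-4), y(1e-8):", [y(10.0 ** -k, C) for k in (2, 4, 8)])
```

The derivative \( y'(x) = \ln x + 1 + C \) is unbounded as x → 0+, which is another way to see why no Maclaurin expansion exists.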

The second order differential equation

We do not consider differential equations with irregular singularities for two reasons. First, they usually do not occur in practical applications. Second, for these equations we do not have existence and uniqueness theorems, mostly because the behavior of solutions near such singular points is not known in general.

Example: We present some examples of differential equations having regular singular points.

- The differential equation \( \displaystyle x^2 y'' + \left( \cos x -1 \right) y' + e^x\,y =0 \) has a singular point at the origin. Calculating the limits

\begin{align*} \lim_{x \to 0} x\,p(x) &= \lim_{x \to 0} x\,\frac{\cos x -1}{x^2} = 0 \quad \mbox{by l'Hôpital's rule}, \\ \lim_{x \to 0} x^2\,q(x) &= \lim_{x \to 0} x^2\,\frac{e^x}{x^2} = 1 < \infty , \end{align*}we see that both limits are finite, so the point x = 0 is a regular singular point.

- The differential equation \( \left( x-2 \right) y'' + x^{-1} y' + \left( x+1 \right) y = 0 \) has two regular singular points, at x = 0 and x = 2. To see this, we divide by x - 2 and write it as

\[ y'' + \frac{1}{x\left( x-2 \right)} \, y' + \frac{x+1}{x-2}\, y =0. \]In order to determine whether the finite singular points are regular or irregular, we find the limits:\begin{align*} \lim_{x \to 0} \left( x \times \frac{1}{x\left( x-2 \right)} \right) &= \lim_{x \to 0} \, \frac{1}{x-2} = -\frac{1}{2} ; \\ \lim_{x \to 0} \left( x^2 \times \frac{x+1}{x-2} \right) &= \lim_{x \to 0} \, \frac{x^2 \left( x+1 \right)}{x-2} = 0. \end{align*}Since both limits are finite, the singular point x = 0 is regular. Next, we compute the corresponding limits at x = 2:\begin{align*} \lim_{x \to 2} \left( (x-2) \times \frac{1}{x\left( x-2 \right)} \right) &= \lim_{x \to 2} \, \frac{1}{x} = \frac{1}{2} ; \\ \lim_{x \to 2} \left( (x-2)^2 \times \frac{x+1}{x-2} \right) &= \lim_{x \to 2} \, \left( x+1 \right) (x-2) = 0. \end{align*}So the finite singular point x = 2 is also regular.

We should also check the point at infinity, because its nature is one of the key features that distinguish types of equations. Therefore, we make the transformation t = 1/x. Then the derivatives take the form

\begin{align*} \frac{{\text d}y}{{\text d}x} &= \frac{{\text d}y}{{\text d}t} \, \frac{{\text d}t}{{\text d}x} = - \frac{1}{x^2} \, \frac{{\text d}y}{{\text d}t} = - t^2 \frac{{\text d}y}{{\text d}t} , \\ \frac{{\text d}^2 y}{{\text d}x^2} &= \frac{\text d}{{\text d}x} \left( -t^2 \frac{{\text d}y}{{\text d}t} \right) = \frac{\text d}{{\text d}t} \left( -t^2 \frac{{\text d}y}{{\text d}t} \right) \frac{{\text d}t}{{\text d}x} = t^4 \frac{{\text d}^2 y}{{\text d}t^2} + 2t^3 \frac{{\text d}y}{{\text d}t} . \end{align*}Since \( \frac{1}{x(x-2)} = \frac{t^2}{1-2t} \) and \( \frac{x+1}{x-2} = \frac{t+1}{1-2t} , \) putting these quantities into the given equation, we obtain\[ t^4 \frac{{\text d}^2 y}{{\text d}t^2} + 2t^3 \frac{{\text d}y}{{\text d}t} - \frac{t^2}{1-2t} \, t^2 \frac{{\text d}y}{{\text d}t} + \frac{t+1}{1-2t}\, y = 0. \]Upon division by \( t^4 \) and using \( \frac{2}{t} - \frac{1}{1-2t} = \frac{2-5t}{t(1-2t)} , \) we get\[ \frac{{\text d}^2 y}{{\text d}t^2} + \frac{2-5t}{t(1-2t)} \, \frac{{\text d}y}{{\text d}t} + \frac{1}{t^4}\,\frac{t+1}{1-2t}\, y = 0. \]Finally, we check the singularity at t = 0 by calculating the limits:\begin{align*} \lim_{t \to 0} \left( t \times \frac{2-5t}{t(1-2t)} \right) &= \lim_{t \to 0} \, \frac{2-5t}{1-2t} = 2 , \\ \lim_{t \to 0} \left( t^2 \times \frac{1}{t^4}\,\frac{t+1}{1-2t} \right) &= \lim_{t \to 0} \, \frac{1}{t^2}\,\frac{t+1}{1-2t} = \infty . \end{align*}Since the second limit is infinite, the point at infinity is an irregular singular point. Hence, the given differential equation belongs to the confluent Heun type.
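The limits in these examples can be double-checked numerically. The Python sketch below (the helper names `p`, `q`, `P_inf`, `Q_inf`, and `probe` are ours) evaluates \( (x - x_0)\,p(x) \) and \( (x - x_0)^2 q(x) \) at points approaching each singular point of the second equation. For the point at infinity it builds the transformed coefficients directly from the substitution formulas \( y_x = -t^2 y_t \) and \( y_{xx} = t^4 y_{tt} + 2t^3 y_t \), so it checks the simplification without reusing it. Sampling a few small offsets only suggests a limit; it is not a proof.

```python
def p(x):
    """Coefficient of y' after dividing (x - 2) y'' + y'/x + (x + 1) y = 0 by (x - 2)."""
    return 1.0 / (x * (x - 2.0))

def q(x):
    """Coefficient of y in the same normalized equation."""
    return (x + 1.0) / (x - 2.0)

def P_inf(t):
    """Coefficient of dy/dt after t = 1/x: 2/t - p(1/t)/t^2."""
    return 2.0 / t - p(1.0 / t) / t**2

def Q_inf(t):
    """Coefficient of y after t = 1/x: q(1/t)/t^4."""
    return q(1.0 / t) / t**4

def probe(P, Q, x0, h):
    """(x - x0) P(x) and (x - x0)^2 Q(x) at x = x0 + h, numerical stand-ins for the limits."""
    x = x0 + h
    return h * P(x), h**2 * Q(x)

for h in (1e-2, 1e-4, 1e-6):
    print(f"h={h:g}  x=0: {probe(p, q, 0.0, h)}  x=2: {probe(p, q, 2.0, h)}  "
          f"infinity: {probe(P_inf, Q_inf, 0.0, h)}")
```

As h shrinks, the pair at x = 0 settles near (-1/2, 0) and the pair at x = 2 near (1/2, 0), while at infinity the first entry approaches 2 and the second grows without bound.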

Example: We present some examples of differential equations having irregular singular points.

- The differential equation \( \displaystyle x^3 y'' + y =0 \) has a singular point at the origin. We rewrite the equation with the second derivative isolated:

\( \displaystyle y'' + \frac{y}{x^3} = 0 . \)

Calculating the limit

\begin{align*} \lim_{x \to 0} x^2\,q(x) &= \lim_{x \to 0} x^2\,\frac{1}{x^3} = \lim_{x \to 0} \frac{1}{x} = \infty, \end{align*}we see that it is not finite, so the point x = 0 is an irregular singular point.

Next, we check the point at infinity by making the substitution t = 1/x. Then the derivatives become

\begin{align*} \frac{{\text d}y}{{\text d}x} &= \frac{{\text d}y}{{\text d}t} \, \frac{{\text d}t}{{\text d}x} = - \frac{1}{x^2} \, \frac{{\text d}y}{{\text d}t} = - t^2 \frac{{\text d}y}{{\text d}t} , \\ \frac{{\text d}^2 y}{{\text d}x^2} &= \frac{\text d}{{\text d}x} \left( -t^2 \frac{{\text d}y}{{\text d}t} \right) = \frac{\text d}{{\text d}t} \left( -t^2 \frac{{\text d}y}{{\text d}t} \right) \frac{{\text d}t}{{\text d}x} = t^4 \frac{{\text d}^2 y}{{\text d}t^2} + 2t^3 \frac{{\text d}y}{{\text d}t} . \end{align*}For the new independent variable t, the differential equation becomes\[ \frac{1}{t^3} \left( t^4 \frac{{\text d}^2 y}{{\text d}t^2} + 2t^3 \frac{{\text d}y}{{\text d}t} \right) + y = 0, \]which simplifies to\[ t\,\frac{{\text d}^2 y}{{\text d}t^2} + 2\, \frac{{\text d}y}{{\text d}t} + y = 0 , \]for which the origin t = 0 is a regular singular point: after division by t the coefficients are 2/t and 1/t, and \( t \cdot \frac{2}{t} = 2 , \) \( t^2 \cdot \frac{1}{t} = t \to 0 . \) Therefore, we conclude that the given differential equation \( \displaystyle x^3 y'' + y =0 \) has a regular singular point at infinity.

- Consider the differential equation

\[ \frac{{\text d} y}{{\text d}x} + 2\, \frac{\sec^2 x}{\tan x}\,y = 3\,\sec^2 x , \qquad y(1) =0 . \]Singularities of this differential equation come from two sources: the zeros of the tangent function, \( \displaystyle x = k\,\pi , \) and the points \( \displaystyle x = \frac{\pi}{2} + n\,\pi , \) where the secant and tangent functions are undefined; here k, n = 0, ±1, ±2, ... . Initial conditions cannot be specified at singular points because we do not know whether a solution of such an initial value problem exists (and is unique). Since our initial condition y(1) = 0 is specified at x = 1, we expect the corresponding solution to exist within the interval 0 < x < π/2 ≈ 1.5708.

The singular point x = 0 is a regular singular point because tan x ∼ x as x → 0. On the other hand, the point x = π/2 is an irregular singular point for the given differential equation.
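The interval of existence in the last example can be confirmed from a closed-form solution. Assuming the coefficient reads \( 2\sec^2 x/\tan x \), the integrating factor is \( \exp \left( \int 2\sec^2 x/\tan x\,{\text d}x \right) = \tan^2 x \), which gives \( \left( y\tan^2 x \right)' = 3\sec^2 x \tan^2 x \), hence \( y = \tan x + C\cot^2 x \), and y(1) = 0 forces \( C = -\tan^3 1 \). The Python sketch below (our own helper names `y`, `dydx`, `residual`) checks this formula numerically and shows that it blows up at both ends of 0 < x < π/2.

```python
import math

C = -math.tan(1.0) ** 3  # chosen so that y(1) = 0

def y(x):
    """Closed-form solution y = tan x + C cot^2 x on 0 < x < pi/2."""
    return math.tan(x) + C / math.tan(x) ** 2

def dydx(x):
    """Exact derivative: sec^2 x - 2 C sec^2 x / tan^3 x."""
    sec2 = 1.0 / math.cos(x) ** 2
    return sec2 - 2.0 * C * sec2 / math.tan(x) ** 3

def residual(x):
    """Left side minus right side of y' + 2 (sec^2 x / tan x) y = 3 sec^2 x."""
    sec2 = 1.0 / math.cos(x) ** 2
    return dydx(x) + 2.0 * sec2 / math.tan(x) * y(x) - 3.0 * sec2

print("y(1) =", y(1.0))
for x in (0.2, 0.5, 1.0, 1.3, 1.5):
    print(f"x = {x}: residual {residual(x):.1e}")
# The solution is unbounded at both endpoints of (0, pi/2).
print(y(1e-3), y(math.pi / 2 - 1e-3))
```

The last line prints a large negative value near x = 0 (from the \( \cot^2 x \) term) and a large positive value near x = π/2 (from \( \tan x \)).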

Second order differential equations

[Portraits: Lazarus Immanuel Fuchs (1833--1902) and Ferdinand Georg Frobenius (1849--1917)]

We reformulate the definition of a regular singular point for second order linear differential equations.

Example: Consider the differential equation

Example: Consider the Picard–Fuchs equation:

Example: The differential equation \[ x \left( 1 - x^2 \right) y'' + \left( 1 - 3x^2 \right) y' -x\,y =0 \] was studied by both Legendre (1825) and Kummer (1836) because it describes the periods of the complete elliptic integrals as functions of the modulus x. Kummer recognized this equation as reducible to the hypergeometric equation \[ x \left( 1 - x \right) y'' + \left[ \gamma - \left( \alpha + \beta + 1 \right) x \right] y' - \alpha\beta\, y =0. \] The Legendre--Kummer differential equation has three finite regular singular points, x = 0 and x = ±1. ■
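To verify these singular points, divide the Legendre--Kummer equation by \( x(1-x^2) \), which gives \( p(x) = \frac{1-3x^2}{x(1-x^2)} \) and \( q(x) = -\frac{1}{1-x^2} . \) The limits below (our own computation) show that x = 0 and x = 1 are regular; the substitution x → -x leaves the equation unchanged, so x = -1 behaves exactly like x = 1.

\begin{align*} \lim_{x\to 0} x\,p(x) &= \lim_{x\to 0} \frac{1-3x^2}{1-x^2} = 1, & \lim_{x\to 0} x^2 q(x) &= \lim_{x\to 0} \frac{-x^2}{1-x^2} = 0, \\ \lim_{x\to 1} (x-1)\,p(x) &= \lim_{x\to 1} \frac{3x^2-1}{x(1+x)} = 1, & \lim_{x\to 1} (x-1)^2 q(x) &= \lim_{x\to 1} \frac{x-1}{1+x} = 0. \end{align*}

At each of these points the indicial equation is \( r(r-1) + r = r^2 = 0 , \) so the exponents coincide (r = 0 is a double root) and a second solution contains a logarithmic term, as in the equal-exponent case of the summary.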

Initial conditions at a singular point

The Swiss astrophysicist and meteorologist Robert Emden (1862--1940) posed the following problem in the early 1900s:

Determine the first point on the positive x-axis where the solution to the initial value problem\[ x\,y'' + 2\,y' + x\,y(x) = 0 , \qquad y(0) = 1, \quad y' (0) = 0 , \]is zero.

His problem shows that a physical situation sometimes requires us to consider initial value problems with conditions imposed at a singular point. We will show later that the second initial condition \( y' (0) = 0 \) cannot be chosen arbitrarily; in fact, it is not needed, because it is satisfied automatically.
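A minimal Python sketch of how the series method handles Emden's problem (our own illustration, not Emden's method; the function names are ours). At x = 0 the indicial equation \( r(r-1) + 2r = 0 \) has roots r = 0 and r = -1. Substituting \( y = \sum c_k x^k \) (the root r = 0) into \( x y'' + 2y' + xy = 0 \) and collecting powers \( x^{k-1} \) gives \( k(k+1)\,c_k + c_{k-2} = 0 \). For k = 1 this forces \( c_1 = 0 \), which is why \( y'(0) = 0 \) holds automatically. With \( c_0 = 1 \), the partial sum is then searched by bisection for its first positive zero.

```python
import math
from fractions import Fraction

def emden_coefficients(n_terms):
    """Exact coefficients c_k of y = sum c_k x^k solving x y'' + 2 y' + x y = 0, c_0 = 1.

    Collecting powers x^(k-1) gives k (k + 1) c_k + c_(k-2) = 0 (with c_(-1) = 0),
    so k = 1 forces c_1 = 0: the condition y'(0) = 0 is automatic.
    """
    c = [Fraction(0)] * n_terms
    c[0] = Fraction(1)
    for k in range(1, n_terms):
        prev = c[k - 2] if k >= 2 else Fraction(0)
        c[k] = -prev / (k * (k + 1))
    return c

def series(x, c):
    """Evaluate the partial sum at x with Horner's scheme (floating point)."""
    total = 0.0
    for ck in reversed(c):
        total = total * x + float(ck)
    return total

def first_zero(c, lo=2.0, hi=4.0, tol=1e-13):
    """Bisection on [lo, hi], assumed to bracket exactly one sign change."""
    f_lo = series(lo, c)
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        f_mid = series(mid, c)
        if (f_mid > 0) == (f_lo > 0):
            lo, f_lo = mid, f_mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

c = emden_coefficients(60)
print([str(ck) for ck in c[:6]])
root = first_zero(c)
print(root, math.pi)
```

The coefficients are those of sin x / x. Indeed, the equation can be written as \( (xy)'' + xy = 0 \), so \( y = (A\sin x + B\cos x)/x \); the exponent r = -1 corresponds to cos x / x, which is unbounded at the origin. The first positive zero is therefore π.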

Example: Let us consider the Euler equation \[ x^2 y'' + x\,y' - y =0 . \] It has the general solution \[ y(x) = C_1 x + C_2 x^{-1} , \] with arbitrary constants C1 and C2. Suppose we are given the initial condition y(0) = 1. To satisfy it, we have to set C2 = 0 because \( x^{-1} \) is undefined at the origin. However, this does not help: every function C1 x vanishes at x = 0, so no choice of C1 gives y(0) = 1. Hence our initial value problem has no solution.

Now suppose that the initial condition is homogeneous, y(0) = 0. Then every function \[ y(x) = C_1 x \] satisfies it, for any choice of the constant C1, so this problem has infinitely many solutions. Adding another condition, we get the initial value problem \[ x^2 y'' + x\,y' - y =0 , \qquad y(0) = 0 , \quad y' (0) = a. \] Since y'(0) = C1, it has exactly one solution, y(x) = a x, for every value of a.

Let us consider another Euler equation subject to one initial condition \[ x^2 y'' + 2x\,y' = 0 \qquad \Longleftrightarrow \qquad \frac{{\text d}^2 y}{{\text d} x^2} + \frac{2}{x}\,\frac{{\text d} y}{{\text d} x} = 0 , \qquad y(0) = 1. \] The general solution of the given Euler equation is \[ y(x) = C_1 + C_2 x^{-1} , \] with some arbitrary constants C1 and C2. To satisfy this single initial condition, we have to set C1 = 1 and C2 = 0. This yields the constant solution \[ y(x) = 1. \] Any other second initial condition is either redundant (because it will be satisfied automatically if you set the derivative to be zero at x = 0) or leads to no solution. ■
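The general solution quoted in this example comes from the standard Euler-equation substitution \( y = x^m \); here is that step written out (our own worked check):

\begin{align*} x^2 \left( x^m \right)'' + 2x \left( x^m \right)' &= \left[ m(m-1) + 2m \right] x^m = m(m+1)\, x^m = 0 \quad \Longrightarrow \quad m = 0 \ \mbox{or} \ m = -1, \\ y(x) &= C_1 + C_2 x^{-1} , \qquad y'(x) = - C_2 x^{-2} . \end{align*}

Because \( y'(x) = -C_2 x^{-2} \) is unbounded near the origin unless C2 = 0, every solution that stays bounded at x = 0 is constant. Its derivative there is zero, so the condition y'(0) = 0 adds nothing, while y'(0) ≠ 0 cannot be satisfied.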

Summary

- If x = 0 is an ordinary point of equation \eqref{Eqsingular.3}, then we may assume that p(x) and q(x) have the known Maclaurin expansions \begin{equation} \label{Eqsingular.6} p(x) = \sum_{n\ge 0} p_n x^n , \qquad q(x) = \sum_{n\ge 0} q_n x^n , \end{equation} in the region |x| < ρ, where ρ represents the minimum of the radii of convergence of the two series in formulas \eqref{Eqsingular.6}. In this case, equation \eqref{Eqsingular.3} will have two linearly independent solutions of the form \begin{equation} \label{Eqsingular.7} y(x) = \sum_{n=0}^{\infty} c_n x^n . \end{equation}

- Alternatively, if x = 0 is a regular singular point of equation \eqref{Eqsingular.3}, then we may assume that p(x) and q(x) have the known expansions \begin{equation} \label{Eqsingular.8} p(x) = \sum_{n=-1}^{\infty} p_n x^n , \qquad q(x) = \sum_{n=-2}^{\infty} q_n x^n , \end{equation} in the region 0 < |x| < ρ. A solution to the homogeneous equation \eqref{Eqsingular.3} with a regular singular point at the origin is assumed to have a generalized power series expansion \begin{equation} \label{Eqsingular.9} y(x) = x^{\alpha} \sum_{n\ge 0} c_n x^n , \qquad c_0 \ne 0 . \end{equation} Upon substituting the solution series \eqref{Eqsingular.9} and the coefficient series \eqref{Eqsingular.8} into the differential equation \eqref{Eqsingular.3}, we need to determine the roots of the indicial equation \begin{equation} \label{Eqsingular.10} \alpha^2 + \alpha \left( p_{-1}-1\right) + q_{-2} = 0 . \end{equation} This equation is obtained by equating to zero the coefficient of the lowest order term after the substitution; since \( c_0 \ne 0 , \) that coefficient vanishes only when α satisfies \eqref{Eqsingular.10}. The two roots of this equation, α1 and α2, are called the exponents of the singularity. There are several sub-cases, depending on the values of the exponents. The first three assume that the exponents are real:

- If α1 ≠ α2 and α1 - α2 is not equal to an integer, then equation \eqref{Eqsingular.3} will have two linearly independent solutions in the forms \begin{equation} \label{Eqsingular.11} \begin{split} y_1(x)&= |x|^{\alpha_1}\left( 1+\sum_{n=1}^{\infty}b_n x^n \right) , \\ y_2(x)&= |x|^{\alpha_2}\left( 1+\sum_{n=1}^{\infty}c_n x^n \right) . \end{split} \end{equation}

- If α1 = α2, then (calling α = α1 = α2) equation \eqref{Eqsingular.3} will have two linearly independent solutions of the form \begin{equation} \label{Eqsingular.12} \begin{split} y_1(x)&= |x|^{\alpha}\left( 1+\sum_{n=1}^{\infty}d_nx^n \right) , \\ y_2(x)&= y_1(x)\ln |x| + |x|^{\alpha}\sum_{n=0}^{\infty}e_nx^n. \end{split} \end{equation}

- If α1 = α2 + M, where M is a positive integer, then equation \eqref{Eqsingular.3} will have two linearly independent solutions of the form \begin{equation} \label{Eqsingular.13} \begin{split} y_1(x)&= |x|^{\alpha_1}\left( 1+\sum_{n=1}^{\infty}f_nx^n \right) , \\ y_2(x)&= h\,y_1(x)\ln | x| + |x|^{\alpha_2}\sum_{n=0}^{\infty} g_nx^n, \end{split} \end{equation} where the parameter h may be equal to zero.

- If α1 and α2 are distinct complex conjugate numbers, then a solution can be found in the form \begin{equation} \label{Eqsingular.14} y(x) = x^{\alpha_1} \sum_{n=0}^{\infty} h_n x^n , \end{equation} where the coefficients hn are complex. In this case, for x > 0, two linearly independent real solutions are the real and imaginary parts of y(x), that is, y1(x) = Re y(x) and y2(x) = Im y(x).
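The case analysis above can be sketched in Python. The helper names `indicial_roots` and `classify` are ours; the inputs are the leading coefficients \( p_{-1} \) and \( q_{-2} \) of the expansions of p(x) and q(x), and floating-point comparisons use a small tolerance, so this is an illustration rather than a robust classifier. The sample inputs come from equations in this section: the Legendre--Kummer equation at x = 0 \( (p_{-1} = 1, q_{-2} = 0) \), Emden's equation \( xy'' + 2y' + xy = 0 \) \( (p_{-1} = 2, q_{-2} = 0) \), \( x^2 y'' + (\cos x - 1) y' + e^x y = 0 \) \( (p_{-1} = 0, q_{-2} = 1) \), and \( (x-2)y'' + x^{-1}y' + (x+1)y = 0 \) at x = 0 \( (p_{-1} = -1/2, q_{-2} = 0) \).

```python
import cmath

def indicial_roots(p_m1, q_m2):
    """Roots of alpha^2 + alpha (p_{-1} - 1) + q_{-2} = 0, larger real part first."""
    b = p_m1 - 1.0
    sq = cmath.sqrt(b * b - 4.0 * q_m2)
    r1, r2 = (-b + sq) / 2.0, (-b - sq) / 2.0
    return (r1, r2) if r1.real >= r2.real else (r2, r1)

def classify(p_m1, q_m2, tol=1e-12):
    """Return (sub-case, alpha1, alpha2) following the four sub-cases listed above."""
    a1, a2 = indicial_roots(p_m1, q_m2)
    if abs(a1.imag) > tol:
        return "complex conjugate", a1, a2
    a1, a2 = a1.real, a2.real
    diff = a1 - a2  # nonnegative because of the ordering
    if diff < tol:
        return "equal (logarithmic second solution)", a1, a2
    if abs(diff - round(diff)) < tol:
        return "differ by a positive integer (h may be nonzero)", a1, a2
    return "non-integer difference", a1, a2

examples = {
    "Legendre-Kummer, x = 0": (1.0, 0.0),
    "Emden, x = 0": (2.0, 0.0),
    "x^2 y'' + (cos x - 1) y' + e^x y = 0, x = 0": (0.0, 1.0),
    "(x - 2) y'' + y'/x + (x + 1) y = 0, x = 0": (-0.5, 0.0),
}
for name, (p_m1, q_m2) in examples.items():
    print(name, "->", classify(p_m1, q_m2))
```

The four sample equations land in the four different sub-cases, with exponents 0, 0; 0, -1; (1 ± i√3)/2; and 3/2, 0, respectively.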

References

- Birkhoff, G.D., Singular Points of Ordinary Linear Differential Equations, Transactions of the American Mathematical Society, 1909, Vol. 10, No. 4, pp. 436--470.

- Dettman, J.W., Power Series Solutions of Ordinary Differential Equations, The American Mathematical Monthly, 1967, Vol. 74, No. 3, pp. 428--430.

- Frobenius, F.G., Ueber die Integration der linearen Differentialgleichungen durch Reihen (in German; its English translation: On the integration of linear differential equations by means of series), Journal für die reine und angewandte Mathematik, 1873, 76, pp. 214--235.

- Fuchs, L., Zur Theorie der linearen Differentialgleichungen mit veränderlichen Coefficienten (in German; its English translation: On the theory of linear differential equations with variable coefficients), Journal für die reine und angewandte Mathematik, 1866, 66, pp. 121--160.

- Gray, J.J., Fuchs and the theory of differential equations, Bulletin of the American Mathematical Society, 1984, Volume 10, Number 1, pp. 1--26.

- Grigorieva, E., Methods of Solving Sequence and Series Problems, Birkhäuser, 2016.

- Kreshchuk, M., Gulden, T., The Picard–Fuchs equation in classical and quantum physics: application to higher-order WKB method, Journal of Physics A: Mathematical and Theoretical, 2019, Volume 52, Number 15, doi: 10.1088/1751-8121/aaf272

- Motsa, S.S., and Sibanda, P., A new algorithm for solving singular IVPs of Lane-Emden type, Latest Trends on Applied Mathematics, Simulation Modeling, 2010.

- Yiğider, M., Tabatabaei, K., and Çelik, E., The Numerical Method for Solving Differential Equations of Lane-Emden Type by Padé Approximation, Discrete Dynamics in Nature and Society, Volume 2011, Article ID 479396, 9 pages, http://dx.doi.org/10.1155/2011/479396.
